Instagram Launch New Caption Warning Safety Feature

Instagram have recently launched a number of safety features to ensure time spent on the platform is safe, enjoyable and productive for their users. If you are affected by cyberbullying or anything touched on within this article, follow the links to our various support services or click on the blue logo icon at the bottom right of the screen to start using Cybersmile Assistant, our smart AI support assistant.

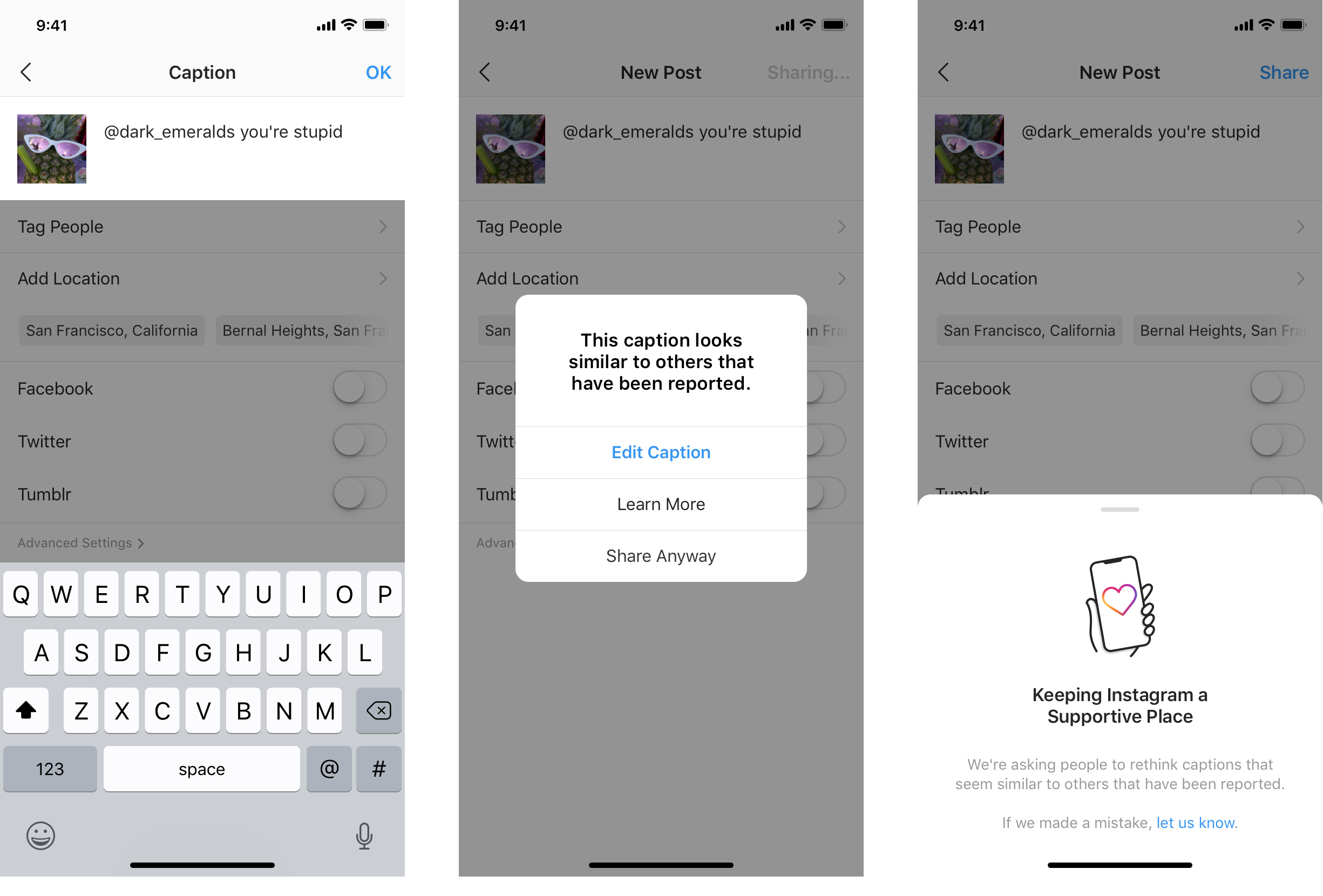

Instagram have announced the launch of a new safety feature that uses AI technology to tackle cyberbullying. The new Caption Warning feature uses AI to detect comments, phrases and captions similar to those previously flagged as harmful, and alerts the user with an automated message advising them that the content they are about to post may be considered offensive.

After testing the tool earlier this year, Instagram found that, when given an opportunity to reflect, some people chose to undo their comment and share something less hurtful or offensive.

“We should all consider the impact of our words, especially online where comments can be easily misinterpreted. Tools like Instagram’s Comment and Caption Warning are a useful way to encourage that behavior, before something is posted, rather than relying on reactive action to remove a hurtful comment after it’s been seen by others.”

Dan Raisbeck, Co-founder, The Cybersmile Foundation

The new feature is one of the first to approach the problem of online bullying from a preventative perspective, allowing a pause for reflection and time to reconsider content that could harm or upset someone. Because Instagram's AI recognizes content resembling what has previously been flagged as offensive, it can respond to content that users or moderators may not recognize as harmful or offensive.

The launch of this new tool is part of a wider initiative by Instagram to address user safety on the platform. Earlier this year, other safety features were introduced to protect Instagram users and ensure that time spent on the platform is safe and positive. These included a new 'Restrict' feature, which allows users to block or filter out unwanted interactions without alerting the bully. This has been well received by users who can be reluctant to block or unfollow a bully for fear of retaliation or of the situation escalating.

If you are affected by any kind of online negativity, we can help you. Visit our Cyberbullying Help Center or click on the blue logo icon at the bottom right of the screen to open Cybersmile Assistant, our smart AI support assistant. For further information about Cybersmile and the work we do, please explore the following suggestions:

- Who Are Cybersmile?

- People We’ve Helped

- Cyberbullying And Online Abuse Help Center

- The Cybersmile Conversation

- World Leaders And Technology Companies Pledge To Fight Online Hate Speech

- Cybersmile And Claire’s Partner For Bullying Prevention Month Campaign

- Body Confidence Influencer Chessie King Announced As Official Cybersmile Ambassador And Media Spokesperson

- Studies Reveal Stark Contrast Between Teenage Male And Female Cyberbullying Incidents

- Cybersmile Campaigns

- Cybersmile Publishes National Banter Or Bullying? Research Report

- What We Do

- Cybersmile Gaming

- Catching Up With Zoe Sugg To Discuss Life Online

- Cybersmile And Instagram Announce Anti-Bullying Campaign: Banter or Bullying?

- Cybersmile Publishes National Millennial And Gen Z Study Of Attitudes And Perspectives Toward Social Media Platforms

- Florida Mandates Mental Health Learning In Schools

- Stop Cyberbullying Day 2019 Highlights

- Cybersmile And Rimmel Launch Groundbreaking AI Support Assistant

- Gaming Help Center

- Cybersmile Launch Interactive Digital Civility Learning Platform

- Understanding Social Media And Mental Health

- Cybersmile Wins Gold At Cannes Lions For Body Positivity Campaign

- Cybersmile To Make All Educational Workshops Completely Free For Everybody

- Corporate Partnership Program

- Cybersmile Newsroom

- Become A Cybersmile Sustainer

What do you think about this latest safety development by Instagram? Share your thoughts by contacting us or tweeting us @CybersmileHQ.